Linkage of Similar Code Snippets Assessed in the Micro Benchmark Service jsPerf

Authors – Kazuya Saiki and Akinori Ihara

Venue – 21st IEEE International Working Conference on Source Code Analysis and Manipulation

Preprint – TBA

Abstract – Benchmarking is the practice of assessing performance (e.g., program execution time), in which developers prepare and run several test cases over a long period. To reasonably assess the performance of method-level code snippets, developers can use a micro benchmark. Several micro benchmarks for JavaScript are offered as online web services (e.g., jsPerf and MeasureThat.net), where developers can easily search for code snippets with better performance. However, because such a service holds a collection of versatile method-level code snippets, developers will also find many similar code snippets that implement different functions. To find replaceable code snippets with better performance, we aim to distinguish, at a finer granularity than the method level, similar code snippets that implement different functions in micro benchmark services. This study proposes an approach to collect diverse code snippets that implement a similar function. The approach uses Code2Vec to measure the similarity between code snippets assessed in the micro benchmark service and finds an appropriate threshold for linking code snippets that implement a similar function. Using a dataset from the micro benchmark service jsPerf that the authors collected, this study evaluates the usefulness of our approach. Specifically, we collect code snippets related to the most frequent topics assessed in jsPerf, “innerHTML vs removeChild” and “for vs forEach”. As a result, we find that our approach achieves higher precision (98% and 92%) in identifying diverse code snippets that implement a similar function.
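The abstract does not spell out how the Code2Vec similarity or the threshold is computed. The Python sketch below shows one common way to realize the idea, assuming each snippet has already been encoded as a fixed-length Code2Vec code vector and that similarity is cosine similarity. The function names, toy vectors, snippet IDs, and the threshold of 0.9 are all hypothetical and are not taken from the paper. The sketch also shows how precision, the metric reported above, can be computed over the linked pairs against a human-judged set of correct links.

```python
"""Sketch: link code snippets whose embedding vectors are similar enough.

Assumptions (hypothetical, not from the paper): each snippet is already a
fixed-length vector (e.g. a Code2Vec code vector), similarity is cosine
similarity, and the threshold value is chosen for illustration only.
"""
from itertools import combinations
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def link_similar_snippets(
    vectors: dict[str, list[float]], threshold: float
) -> list[tuple[str, str, float]]:
    """Return every pair of snippet IDs whose similarity is >= threshold."""
    links = []
    # Sorting makes the pair order deterministic.
    for (id_a, vec_a), (id_b, vec_b) in combinations(sorted(vectors.items()), 2):
        sim = cosine_similarity(vec_a, vec_b)
        if sim >= threshold:
            links.append((id_a, id_b, sim))
    return links


def precision(predicted: list[tuple[str, str]], relevant: set[frozenset[str]]) -> float:
    """Fraction of predicted links that were judged to implement a similar function."""
    if not predicted:
        return 0.0
    hits = sum(1 for a, b in predicted if frozenset((a, b)) in relevant)
    return hits / len(predicted)


if __name__ == "__main__":
    # Toy 3-dimensional vectors; real Code2Vec code vectors are much longer.
    vectors = {
        "for_loop": [0.9, 0.1, 0.0],
        "forEach": [0.8, 0.2, 0.1],
        "innerHTML": [0.0, 0.1, 0.9],
    }
    links = link_similar_snippets(vectors, threshold=0.9)
    print(links)
    ground_truth = {frozenset(("for_loop", "forEach"))}
    print(precision([(a, b) for a, b, _ in links], ground_truth))
```

Raising the threshold trades recall for precision, which is why choosing it carefully matters; in this sketch the threshold is a plain parameter rather than a value tuned on data.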

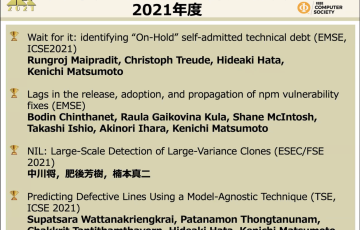

Related articles

[Web] 21st IEEE International Working Conference on Source Code Analysis and Manipulation

Coming soon!